2024. 1. 18. 08:04 · Coding Tools/LG Aimers

LG Aimers: AI Expert Course, 4th Cohort

Module 6. 『Deep Learning』

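• Instructor: Prof. Jaegul Choo, KAIST

• Learning objectives

Learn the basic concepts of deep learning, a type of neural network, and how its representative models are trained.

Learn deep learning models for image and language tasks, and the principles by which they are trained.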

Part 1. Introduction to Deep Neural Networks

-Artificial Neural Networks

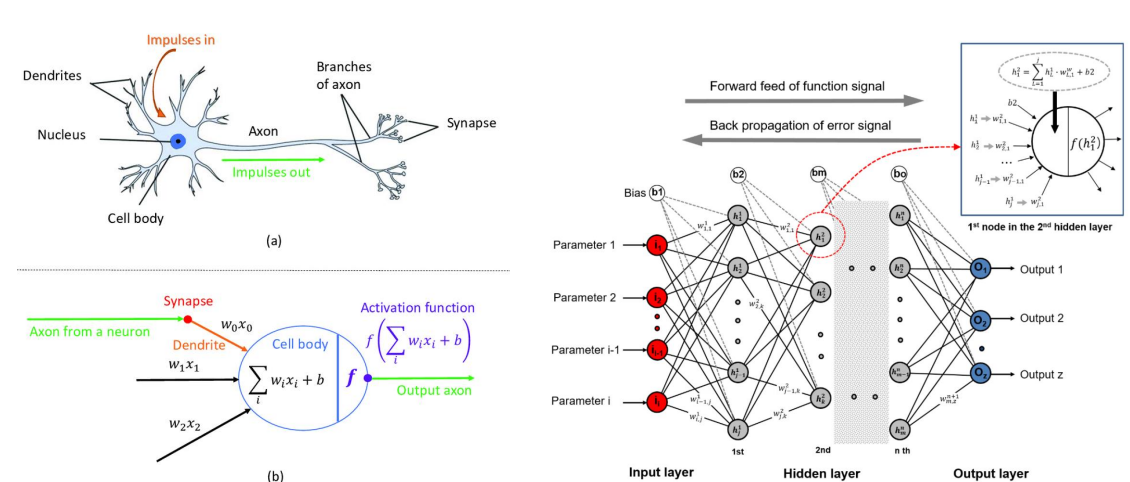

• A technology that mimics the neurons in the human brain

-Deep Neural Network (DNN)

• A DNN improves the accuracy of AI models by stacking multiple neural network layers

A "non-deep" feedforward neural network consists of an input layer -> hidden layer -> output layer, in that order.

A Deep Neural Network (DNN), by contrast, places several hidden layers between the input and output, e.g. input layer -> hidden layer -> hidden layer -> hidden layer -> output layer.
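The difference between the two is only in how many layers are stacked. As a rough illustration, the sketch below describes each network by its layer sizes alone (the sizes 2, 4 and 1 are arbitrary choices for this example, not from the lecture) and counts how many weights and biases a fully connected version of each would have.

```python
def count_parameters(layer_sizes):
    """Count weights + biases of a fully connected network.

    layer_sizes lists the number of units per layer, input first,
    e.g. [2, 4, 1] means input(2) -> hidden(4) -> output(1).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total


shallow = [2, 4, 1]      # input -> hidden -> output
deep = [2, 4, 4, 4, 1]   # input -> hidden -> hidden -> hidden -> output

for name, sizes in [("shallow", shallow), ("deep", deep)]:
    hidden = len(sizes) - 2
    print(f"{name}: {hidden} hidden layer(s), {count_parameters(sizes)} parameters")
```

With these sizes the shallow network has 17 parameters and the deep one 57; every extra hidden layer adds another weight matrix and bias vector to learn.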

-Why Deep Learning Has Been Successful

Three key factors came together:

Big Data, GPU Acceleration, Algorithm Improvements

-Applications of Deep Learning

Computer Vision

(Object Detection, Image Synthesis)

Natural Language Processing

(Machine Translation, Mail Classification)

Time-Series Analysis

(Stock Price Prediction, Speech Recognition & Synthesis)

Reinforcement Learning

(AlphaGo, Atari Games)

-Perceptron and Neural Networks

-What is a Perceptron?

Perceptron

• One kind of neural network

• Devised by Frank Rosenblatt in 1957

• Linear classifier

• Similar in structure to a biological neuron
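A minimal sketch of a perceptron as a linear classifier: a weighted sum of the inputs followed by a step function, trained with the classic perceptron learning rule. The AND-gate data, learning rate and epoch count are illustrative assumptions, not taken from the lecture.

```python
def step(z):
    """Heaviside step activation: fire (1) if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def predict(w, b, x):
    """Perceptron output: step(w . x + b)."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_perceptron(data, lr=1.0, epochs=20):
    """Perceptron learning rule: w += lr * (target - prediction) * x."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(w, b, x)
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

AND is linearly separable, so the learning rule settles on a single line (here `w = [2.0, 1.0]`, `b = -3.0`) that splits the one positive case from the rest.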

-Multi-Layer Perceptron for XOR Gate

Is it possible to solve the XOR problem using a single-layer perceptron?

→ No. A single-layer perceptron can only solve linearly separable problems, and XOR is not linearly separable.
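One quick way to see this is to try many weight settings for a single perceptron step(w1·x1 + w2·x2 + b) and count how many of the four XOR cases each gets right. The grid below is an arbitrary choice for illustration; a finite search is not a proof (the proof is that no straight line separates {(0,1), (1,0)} from {(0,0), (1,1)}), but it shows the ceiling in practice.

```python
import itertools

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def accuracy(w1, w2, b, data):
    """Number of points a single perceptron step(w1*x1 + w2*x2 + b) classifies correctly."""
    return sum((1 if w1 * x1 + w2 * x2 + b >= 0 else 0) == t
               for (x1, x2), t in data)


grid = [i / 2 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
best = max(accuracy(w1, w2, b, XOR)
           for w1, w2, b in itertools.product(grid, repeat=3))
print(f"best single-layer perceptron on XOR: {best}/4 correct")  # 3/4
```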

-Multi-Layer Perceptron

However, a two-layer perceptron can solve the XOR problem.

→ This model is called a multi-layer perceptron (MLP).
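A sketch of one possible two-layer solution, with hand-picked weights (hypothetical, for illustration; a trained network would generally find different values). The first hidden unit computes OR, the second computes AND, and the output fires only when OR is true and AND is false, which is exactly XOR.

```python
def step(z):
    """Heaviside step activation."""
    return 1 if z >= 0 else 0


def two_layer_xor(x1, x2):
    h_or = step(x1 + x2 - 0.5)        # hidden unit 1: OR
    h_and = step(x1 + x2 - 1.5)       # hidden unit 2: AND
    return step(h_or - h_and - 0.5)   # output: OR and not AND


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", two_layer_xor(x1, x2))  # 0, 1, 1, 0
```

The hidden layer remaps the four inputs into a space where a single line does separate the classes, which is why adding a layer helps.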

-Tensorflow Playground

https://playground.tensorflow.org/

-Forward Propagation

• $a_i^{(j)}$: "activation" of the $i$-th unit in the $j$-th layer

• $W^{(j)}$: "weight matrix" mapping from the $j$-th layer to the $(j+1)$-th layer

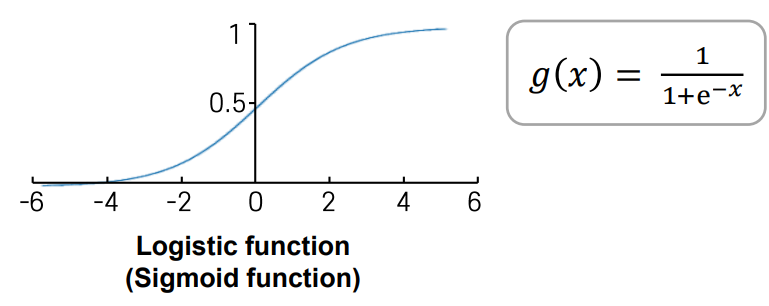
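A minimal sketch of forward propagation in this notation: each layer computes $a^{(j+1)} = g(W^{(j)} a^{(j)} + b^{(j)})$ with the sigmoid as $g$. Keeping the bias as a separate vector $b^{(j)}$ (rather than folding it into $W^{(j)}$ through a constant unit) is an assumption of this sketch, and the 2 -> 3 -> 1 network and its weights are hypothetical.

```python
import math


def sigmoid(z):
    """Logistic activation g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, weights, biases):
    """Forward propagation through a fully connected sigmoid network.

    weights[0] plays the role of W^(1), weights[1] of W^(2), and so on.
    Each W^(j) has one row per unit in layer j+1 and one column per unit in layer j.
    Returns the activation vectors [a^(1), a^(2), ..., a^(L)].
    """
    activations = [list(x)]  # a^(1) is the input itself
    for W, b in zip(weights, biases):
        a = activations[-1]
        # z^(j+1) = W^(j) a^(j) + b^(j)
        z = [sum(w_ik * a_k for w_ik, a_k in zip(row, a)) + b_i
             for row, b_i in zip(W, b)]
        # a^(j+1) = g(z^(j+1))
        activations.append([sigmoid(z_i) for z_i in z])
    return activations


# Hypothetical 2 -> 3 -> 1 network with arbitrary weights.
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]  # W^(1): 3 x 2
b1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -1.0, 0.5]]                      # W^(2): 1 x 3
b2 = [0.2]

for j, a in enumerate(forward([1.0, 0.0], [W1, W2], [b1, b2]), start=1):
    print(f"a^({j}) =", [round(v, 4) for v in a])
```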

-Sigmoid function

= Logistic function

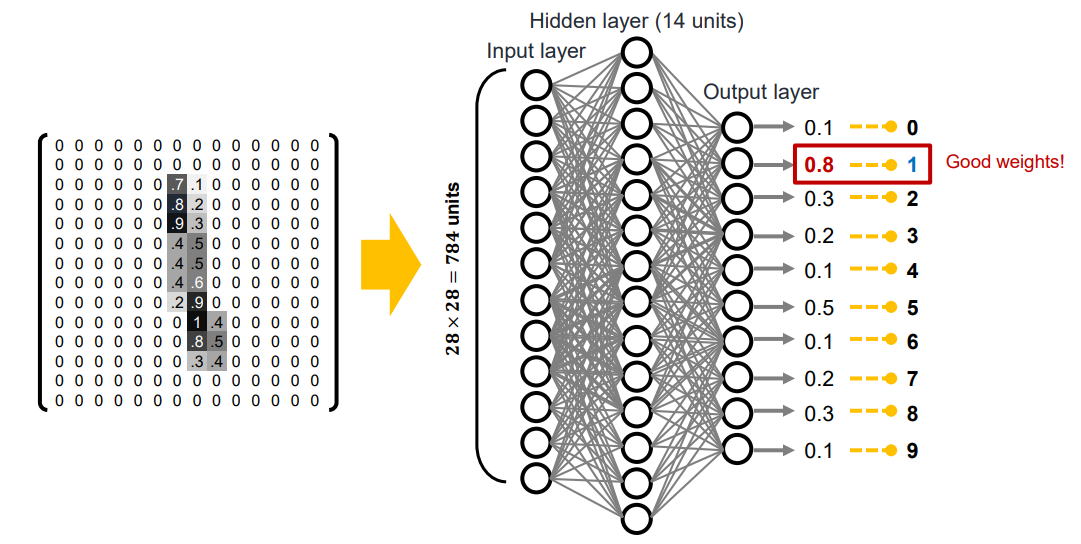
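The sigmoid (logistic) function is $\sigma(z) = \frac{1}{1 + e^{-z}}$, which squashes any real number into $(0, 1)$, and its derivative is $\sigma(z)(1 - \sigma(z))$. A sketch in plain Python: evaluated naively, `math.exp(-z)` raises `OverflowError` for very negative inputs such as `z = -1000`, so this version switches to the algebraically equal form $e^{z} / (1 + e^{z})$ when $z < 0$.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so e lies in (0, 1) and cannot overflow
    return e / (1.0 + e)


def sigmoid_grad(z):
    """Derivative: sigma'(z) = sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


print(sigmoid(0), sigmoid_grad(0))  # 0.5 0.25
for z in (-1000, -2, 2, 1000):
    print(z, sigmoid(z), sigmoid_grad(z))
```

The derivative peaks at 0.25 when z = 0 and approaches 0 as |z| grows, which matters later when gradients are propagated back through many sigmoid layers.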

-MNIST Dataset

MNIST

(Modified National Institute of Standards and Technology)

Handwritten digits from 0 to 9

• 55,000 training examples (the standard 60,000-image training set with 5,000 held out for validation)

• 10,000 testing examples

Each image has been preprocessed:

• Digits are center-aligned

• Digits are rescaled to a similar size

• Each image has a fixed size of 28 × 28 pixels

→ Each image is a matrix of real numbers between 0.0 and 1.0
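The last step is usually just a rescaling. A sketch, assuming the raw pixels are 8-bit gray levels from 0 to 255 (as in the original MNIST files) and that the 28 × 28 matrix is flattened into a 784-dimensional input vector for a fully connected model. The `fake_image` below is a hypothetical stand-in, not real MNIST data.

```python
def preprocess(image):
    """Scale a 28x28 grid of 8-bit gray levels (0-255) to [0.0, 1.0] and flatten to 784 values."""
    if len(image) != 28 or any(len(row) != 28 for row in image):
        raise ValueError("expected a 28 x 28 image")
    return [pixel / 255.0 for row in image for pixel in row]


# Hypothetical stand-in image: a vertical white bar on a black background.
fake_image = [[255 if 12 <= c <= 15 else 0 for c in range(28)] for _ in range(28)]
x = preprocess(fake_image)
print(len(x), min(x), max(x))  # 784 0.0 1.0
```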

-MNIST Classification Model

+ See also the figure below!

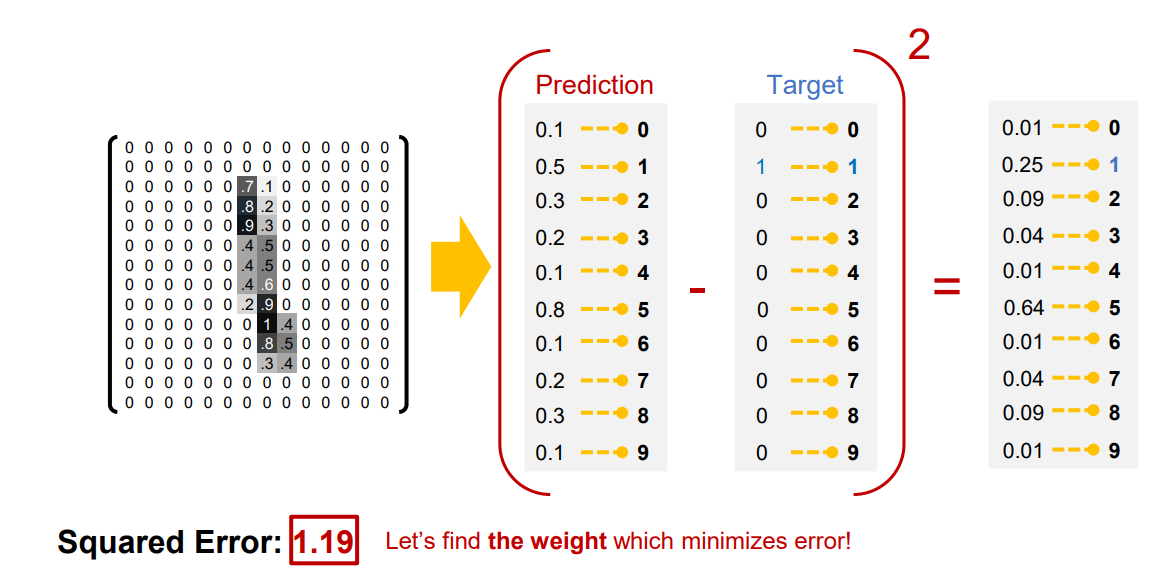

-Softmax Layer (Softmax Classifier)

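• Because the outputs pass through a sigmoid, Prediction $\in [0, 1]$ and Target $\in \{0, 1\}$

→ With MSE loss, both the loss and the gradient magnitude are therefore bounded above, so even a badly wrong prediction produces only a small corrective signal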

• In addition, a better output would be a sum-to-one probability vector over multiple possible classes.
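To see the bound numerically: with a sigmoid output $p = \sigma(z)$ and squared error $(p - t)^2$ (the form without a 1/2 factor is an assumption here), the loss per output can never exceed 1, and the gradient with respect to the logit, $2(p - t)\,p(1 - p)$, shrinks toward zero as the sigmoid saturates, even when the prediction is completely wrong. For contrast only, the sketch also computes binary cross-entropy, a loss not introduced in this part of the notes, whose gradient with respect to $z$ simplifies to $p - t$.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def mse_loss_and_grad(z, t):
    """Squared error (p - t)^2 on a sigmoid output, and its gradient w.r.t. the logit z."""
    p = sigmoid(z)
    return (p - t) ** 2, 2.0 * (p - t) * p * (1.0 - p)


def bce_loss_and_grad(z, t):
    """Binary cross-entropy on a sigmoid output; its gradient w.r.t. z is p - t."""
    p = sigmoid(z)
    return -(t * math.log(p) + (1 - t) * math.log(1 - p)), p - t


# Target is 1, but the model is increasingly confident in the wrong answer.
for z in (-2.0, -5.0, -10.0):
    mse, g_mse = mse_loss_and_grad(z, 1)
    bce, g_bce = bce_loss_and_grad(z, 1)
    print(f"z={z:6.1f}  MSE loss={mse:.4f} grad={g_mse:+.6f}  |  CE loss={bce:.4f} grad={g_bce:+.6f}")
```

As z becomes more negative, the MSE loss creeps toward its ceiling of 1 while its gradient nearly vanishes; the cross-entropy loss keeps growing and its gradient stays close to -1.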

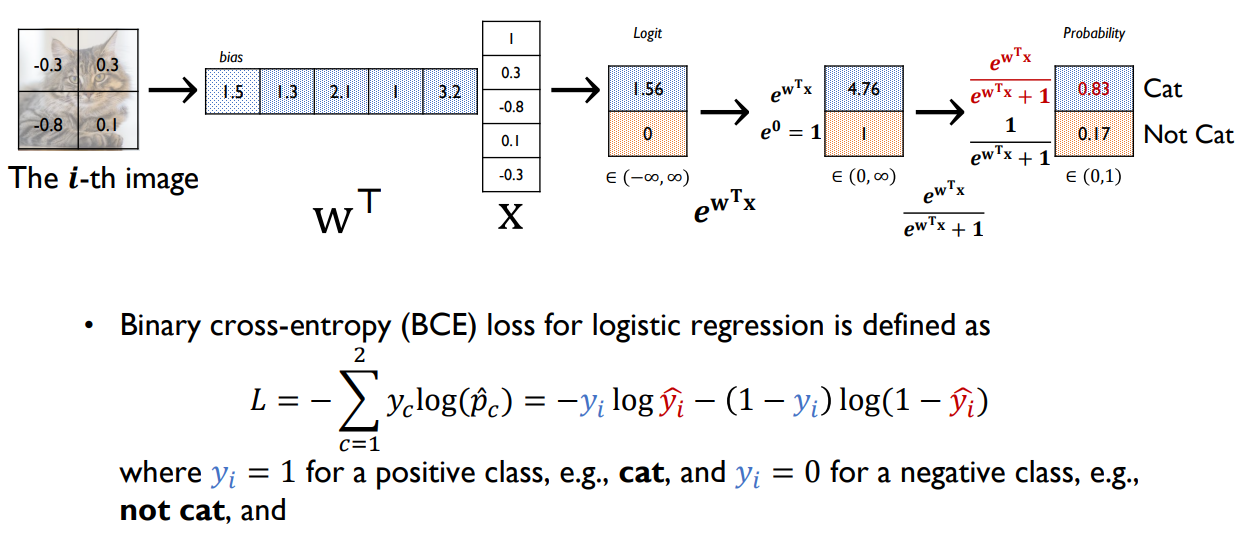
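A softmax layer turns a vector of raw class scores $z$ into a sum-to-one probability vector, $\text{softmax}(z)_k = e^{z_k} / \sum_l e^{z_l}$. A minimal sketch in plain Python; the three scores are hypothetical (an MNIST classifier would produce 10). Subtracting the maximum score first is a standard numerical-stability trick that leaves the result unchanged, because softmax is invariant to adding the same constant to every score.

```python
import math


def softmax(logits):
    """Turn a list of raw class scores into probabilities that sum to one."""
    # Shifting by the max does not change the result but keeps math.exp from overflowing.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])      # hypothetical scores for 3 classes
print([round(p, 3) for p in probs])   # [0.659, 0.242, 0.099]
print(sum(probs))                     # approximately 1.0
```

Unlike independent sigmoid outputs, raising one class's probability here necessarily lowers the others, which is what "a probability vector over multiple classes" requires.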

-Logistic Regression as a Special Case of Softmax Classifier
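With only two classes, softmax reduces to the sigmoid of the score difference: $\frac{e^{z_1}}{e^{z_1} + e^{z_2}} = \frac{1}{1 + e^{-(z_1 - z_2)}} = \sigma(z_1 - z_2)$. Fixing $z_2 = 0$ gives $\sigma(z_1)$, which is exactly the logistic regression output. The sketch below checks this numerically; the score pairs are arbitrary examples.

```python
import math


def softmax(logits):
    m = max(logits)  # shift for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


for z1, z2 in [(2.0, 0.0), (0.3, -1.2), (-4.0, 1.5)]:
    p_softmax = softmax([z1, z2])[0]   # probability of class 1 under 2-class softmax
    p_logistic = sigmoid(z1 - z2)      # logistic regression on the score difference
    print(f"{p_softmax:.6f} vs {p_logistic:.6f}")
```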
